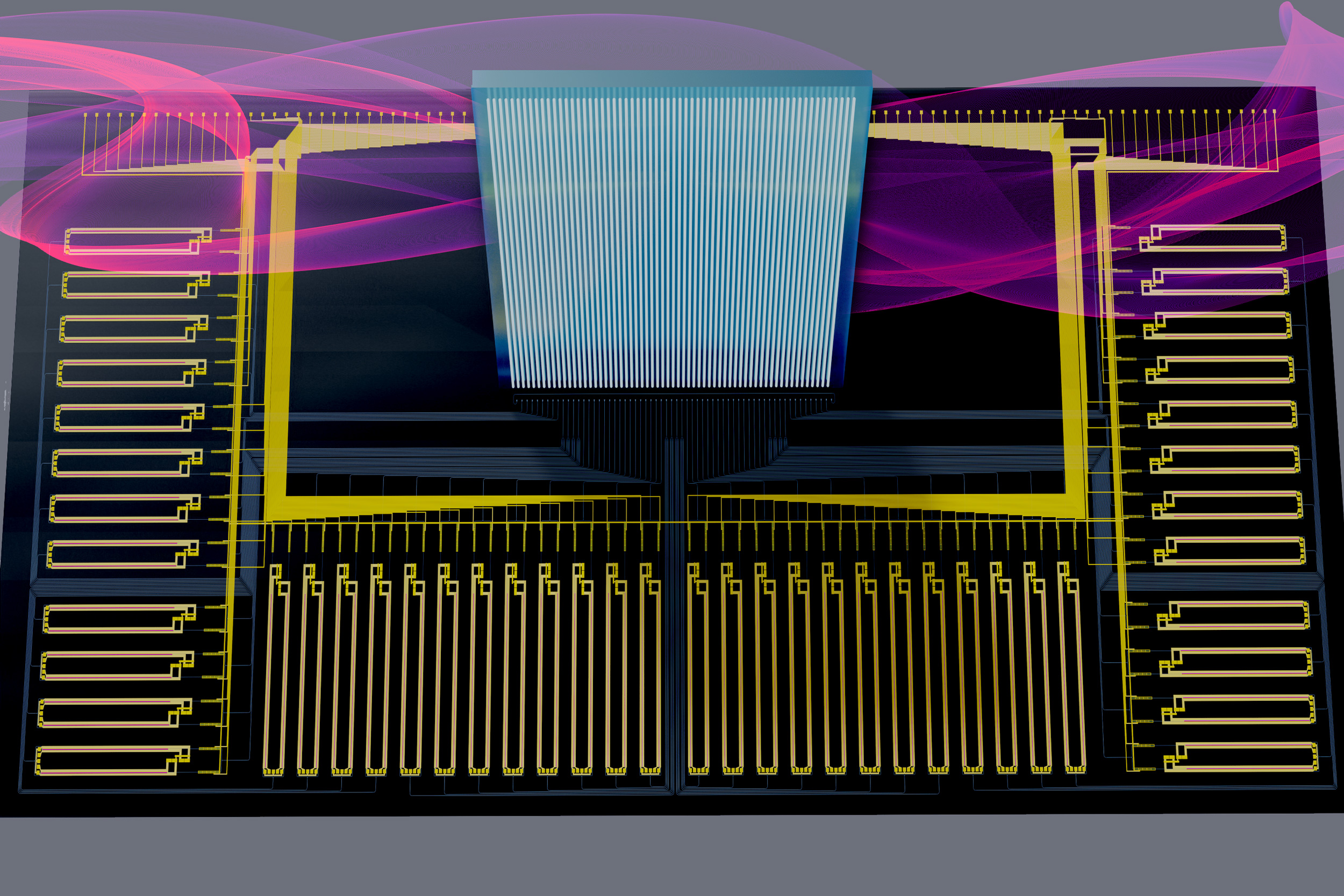

New techniques efficiently accelerate sparse tensors for massive AI models

Researchers from MIT and NVIDIA have developed two techniques that accelerate the processing of sparse tensors, a type of data structure used for high-performance computing tasks. The complementary techniques could result in significant improvements to the performance and energy efficiency …