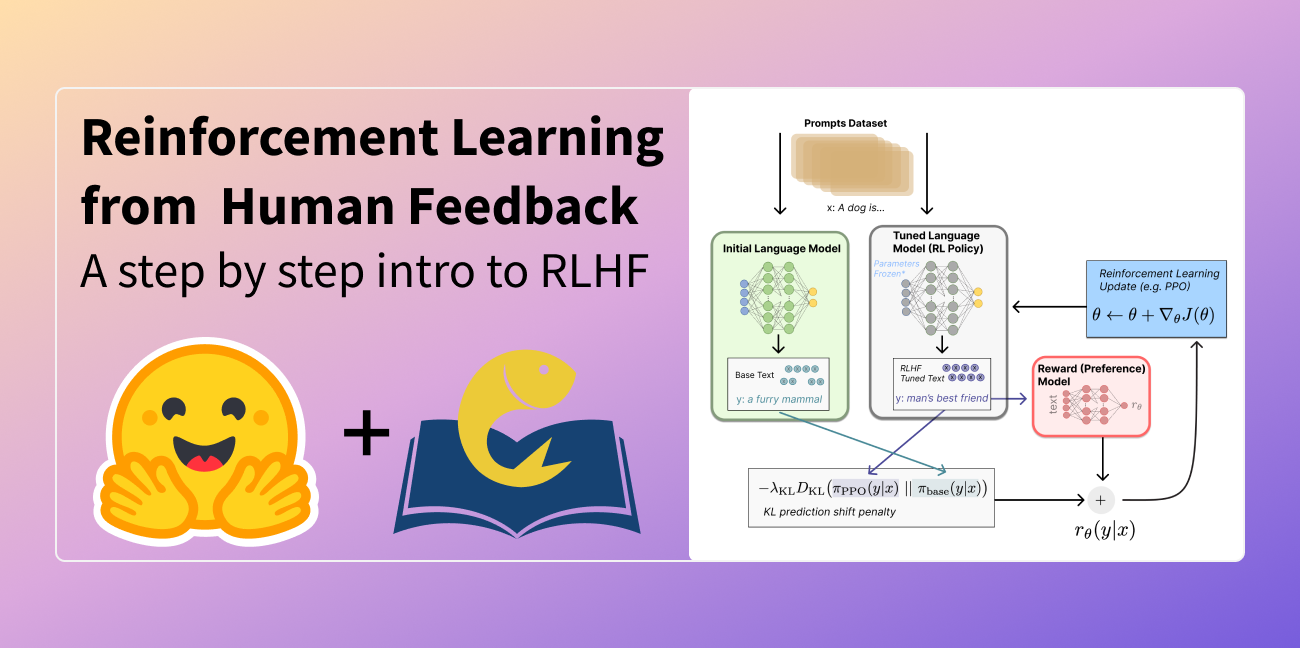

What Is Reinforcement Learning From Human Feedback (RLHF)?

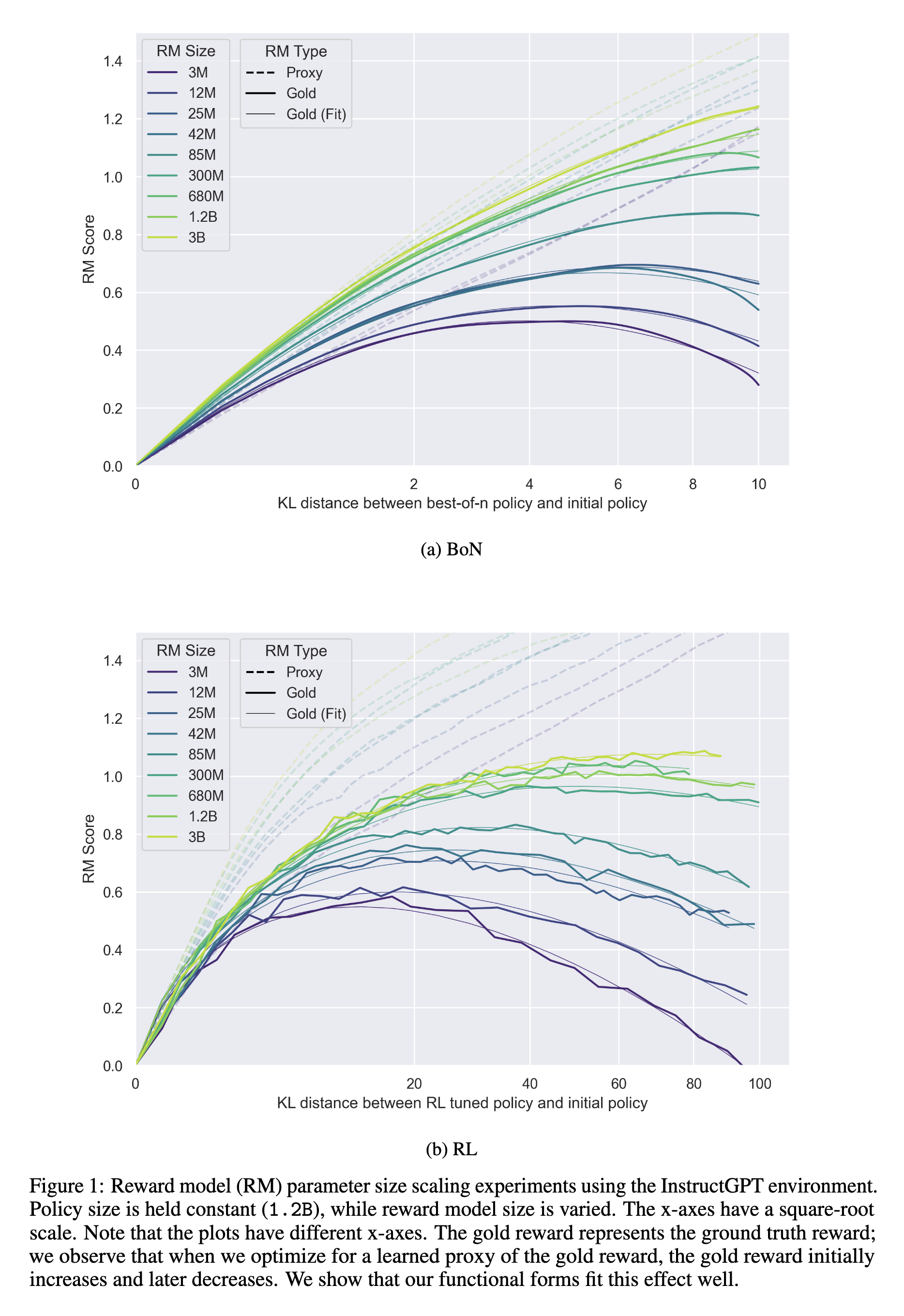

In the fast-moving field of artificial intelligence (AI), Reinforcement Learning From Human Feedback (RLHF) is a technique that fine-tunes a model using human preference judgments as the training signal. It has been used to develop advanced language models like ChatGPT and GPT-4. In this blog post, we will dive into …