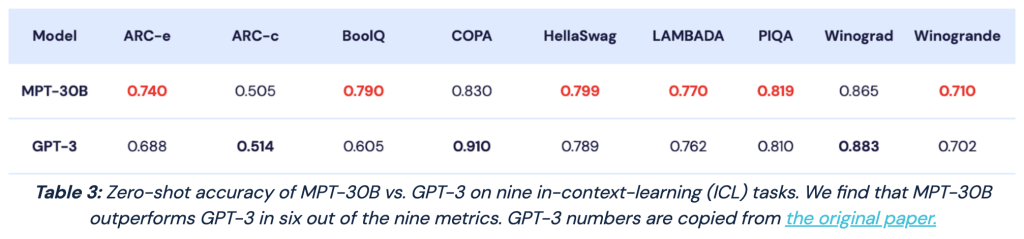

MosaicML’s latest models outperform GPT-3 with just 30B parameters

Open-source LLM provider MosaicML has announced the release of its most advanced models to date: MPT-30B Base, MPT-30B Instruct, and MPT-30B Chat.

These state-of-the-art models were trained on the MosaicML Platform using NVIDIA’s latest-generation H100 accelerators and, according to the company, offer …